# Documents

Every record you index in Typesense is called a Document.

# Index documents

A document to be indexed in a given collection must conform to the schema of the collection.

If the document contains an id field of type string, Typesense will use that field as the identifier for the document.

Otherwise, Typesense will assign an auto-generated identifier to the document.

NOTE

The id should not include spaces or any other characters that require encoding in URLs.

# Index a single document

If you need to index a document in response to some user action in your application, you can use the single document create endpoint.

If you need to index multiple documents at a time, we highly recommend using the import documents endpoint, which is optimized for bulk imports. For example, if you have 100 documents, indexing them all at once with the import endpoint will be much more performant than indexing them one at a time.

# Upsert a single document

The endpoint will replace a document with the same id if it already exists, or create a new document if one doesn't already exist with the same id.

If you need to upsert multiple documents at a time, we highly recommend using the import documents endpoint with action=upsert, which is optimized for bulk upserts.

For example, if you have 100 documents, upserting them all at once with the import endpoint will be much more performant than upserting them one at a time.

Sample Response

Definition

POST ${TYPESENSE_HOST}/collections/:collection/documents

# Index multiple documents

You can index multiple documents in a batch using the import API.

When indexing multiple documents, this endpoint is much more performant than calling the single document create endpoint multiple times in quick succession.

The documents to import need to be formatted as a newline-delimited JSON string, also known as JSON Lines (JSONL) format. This is essentially one JSON object per line, without commas between documents. For example, here is a set of 3 documents represented in JSONL format.

{"id": "124", "company_name": "Stark Industries", "num_employees": 5215, "country": "US"}

{"id": "125", "company_name": "Future Technology", "num_employees": 1232, "country": "UK"}

{"id": "126", "company_name": "Random Corp.", "num_employees": 531, "country": "AU"}

If you are using one of our client libraries, you can also pass in an array of documents and the library will take care of converting it into JSONL.

You can also convert from CSV to JSONL and JSON to JSONL before importing to Typesense.
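As a rough illustration of the format described above, the following sketch serializes a list of Python dictionaries into a JSONL string by hand. The helper name `to_jsonl` is made up for this example and is not part of any Typesense client library.

```python
import json

def to_jsonl(documents):
    """Serialize an iterable of dicts as newline-delimited JSON (one object per line)."""
    # json.dumps escapes newlines inside strings, so each document stays on one line.
    return "\n".join(json.dumps(doc) for doc in documents)

docs = [
    {"id": "124", "company_name": "Stark Industries", "num_employees": 5215, "country": "US"},
    {"id": "125", "company_name": "Future Technology", "num_employees": 1232, "country": "UK"},
]

# This string is what would be sent as the body of an import request.
payload = to_jsonl(docs)
```

There are no commas between documents and no enclosing array; the newline is the only separator.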

# Action modes (Batch create, upsert & update)

Besides batch-creating documents, you can also use the ?action query parameter to batch-upsert and batch-update documents.

| Action | Behavior |
|---|---|
| create (default) | Creates a new document. Fails if a document with the same id already exists. |
| upsert | Creates a new document or updates an existing document if a document with the same id already exists. Requires the whole document to be sent. For partial updates, use the update action below. |
| update | Updates an existing document. Fails if a document with the given id does not exist. You can send a partial document containing only the fields that are to be updated. |

Definition

POST ${TYPESENSE_HOST}/collections/:collection/documents/import

Sample Response

Each line of the response indicates the result of each document present in the request body (in the same order). If the import of a single document fails, it does not affect the other documents.

If there is a failure, the response line will include a corresponding error message as well as the actual document content. For example, the second document had an import failure in the following response:

NOTE

The import endpoint will always return an HTTP 200 OK code, regardless of the import results of the individual documents.

We do this because there might be some documents which succeeded on import and others that failed, and we don't want to return an HTTP error code in those partial scenarios. To keep it consistent, we just return HTTP 200 in all cases.

So always be sure to check the API response for any {success: false, ...} records to see if there are any documents that failed import.
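Because the HTTP status is always 200, the check has to happen on the response body. The sketch below shows one way to do that, assuming each response line is a JSON object with a `success` flag and that failed lines also carry `error` and `document` keys. Those two key names and the sample body are assumptions made for illustration.

```python
import json

def failed_imports(response_body):
    """Return (line_index, result) pairs for every document that failed to import."""
    failures = []
    # Split on "\n" only, since the response is one JSON object per line.
    for index, line in enumerate(response_body.split("\n")):
        if not line.strip():
            continue  # ignore blank lines such as a trailing newline
        result = json.loads(line)
        if not result.get("success", False):
            failures.append((index, result))
    return failures

# Hypothetical response body in which the second document failed.
sample_response = (
    '{"success": true}\n'
    '{"success": false, "error": "Bad JSON.", "document": "[bad doc"}\n'
    '{"success": true}'
)

failures = failed_imports(sample_response)
```

Since results come back in request order, the zero-based index of a failed line identifies the corresponding document in the request body.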

# Configure batch size

By default, Typesense ingests 40 documents at a time: after every 40 documents are ingested, it services the search request queue before switching back to imports.

To increase this value, use the batch_size parameter.

Note that this parameter controls server-side batching of documents sent in a single import API call. You can also do client-side batching, by sending your documents over multiple import API calls (potentially in parallel).

NOTE: Larger batch sizes will consume more transient memory during import.
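Client-side batching, as opposed to the server-side `batch_size` parameter, can be sketched as a small generator that splits a document stream into fixed-size chunks, each of which would then be sent in its own import call. The function name `chunk_documents` is hypothetical.

```python
from itertools import islice

def chunk_documents(documents, size):
    """Yield lists of at most `size` documents from any iterable."""
    if size < 1:
        raise ValueError("size must be at least 1")
    iterator = iter(documents)
    while True:
        chunk = list(islice(iterator, size))
        if not chunk:
            return  # the iterable is exhausted
        yield chunk

# Example: five documents split into client-side batches of two.
chunks = list(chunk_documents([{"id": str(n)} for n in range(5)], 2))
```

Each chunk could be serialized to JSONL and posted separately, which also keeps the memory needed per request bounded.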

# Dealing with Dirty Data

The dirty_values parameter determines what Typesense should do when the type of a particular field being indexed does not match the previously inferred type for that field, or the one defined in the collection's schema.

This parameter can be sent with any of the document write API endpoints, for both single documents and multiple documents.

| Value | Behavior |
|---|---|
| coerce_or_reject | Attempt coercion of the field's value to previously inferred type. If coercion fails, reject the write outright with an error message. |
| coerce_or_drop | Attempt coercion of the field's value to previously inferred type. If coercion fails, drop the particular field and index the rest of the document. |
| drop | Drop the particular field and index the rest of the document. |
| reject | Reject the document outright. |

Default behaviour

If a wildcard (.*) field is defined in the schema or if the schema contains any field name with a regular expression (e.g. a field named .*_name), the default behavior is coerce_or_reject. Otherwise, the default behavior is reject (this ensures backward compatibility with older Typesense versions).

# Indexing a document with dirty data

Let's now attempt to index a document with a title field that contains an integer. We will assume that this field was previously inferred to be of type string. Let's use the coerce_or_reject behavior here:

Similarly, we can use the dirty_values parameter for the update, upsert and import operations as well.
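A minimal sketch of such a request, built with Python's standard library and not sent anywhere, might look like the following. The host `http://localhost:8108`, the API key `xyz`, and the collection name `titles` are placeholders, and the `X-TYPESENSE-API-KEY` header is assumed to be how the key is passed.

```python
import json
import urllib.parse
import urllib.request

def build_create_request(host, api_key, collection, document, dirty_values=None):
    """Build (but do not send) a single-document create request."""
    url = f"{host}/collections/{urllib.parse.quote(collection, safe='')}/documents"
    if dirty_values is not None:
        # dirty_values is passed as a query parameter on the write endpoint.
        url += "?" + urllib.parse.urlencode({"dirty_values": dirty_values})
    return urllib.request.Request(
        url,
        data=json.dumps(document).encode("utf-8"),
        headers={"Content-Type": "application/json", "X-TYPESENSE-API-KEY": api_key},
        method="POST",
    )

# The title is an integer even though the field is assumed to be a string field.
request = build_create_request(
    "http://localhost:8108", "xyz", "titles", {"title": 1984},
    dirty_values="coerce_or_reject",
)
# urllib.request.urlopen(request) would actually send it to a running server.
```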

# Indexing all values as string

Typesense provides a convenient way to store all fields as strings through the use of the string* field type.

Defining a type as string* allows Typesense to accept both singular and multi-value/array values.

Let's say we want to ingest data from multiple devices but want to store them as strings since each device could be using a different data type for the same field name (e.g. one device could send a record_id as an integer, while another device could send a record_id as a string).

To do that, we can define a schema as follows:

{

"name": "device_data",

"fields": [

{"name": ".*", "type": "string*" }

]

}

Now, Typesense will automatically convert any single/multi-valued data into their corresponding string representations when data is indexed with the dirty_values: "coerce_or_reject" mode.

You can see how they will be transformed below:

# Import a JSONL file

You can import a JSONL file, or feed the output of a Typesense export operation directly into the import endpoint, since both use JSONL.

Here's an example file:

You can import the above documents.jsonl file like this.

# Import a JSON file

If you have a file in JSON format, you can convert it into JSONL format using jq:

jq -c '.[]' documents.json > documents.jsonl

Once you have the JSONL file, you can then import it following the instructions above to import a JSONL file.

# Import a CSV file

If you have a CSV file with column headers, you can convert it into JSONL format using mlr:

mlr --icsv --ojsonl cat documents.csv > documents.jsonl

Once you have the JSONL file, you can then import it following the instructions above to import a JSONL file.
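If mlr is not available, a similar conversion can be sketched with Python's standard `csv` and `json` modules. This is an illustrative alternative, not an equivalent of the command above: `csv.DictReader` yields every value as a string, so numeric columns would need explicit conversion before they match a numeric field in your schema.

```python
import csv
import io
import json

def csv_to_jsonl(csv_text):
    """Convert CSV text with a header row into a JSONL string."""
    reader = csv.DictReader(io.StringIO(csv_text))
    # Each row becomes one JSON object keyed by the column headers.
    return "\n".join(json.dumps(row) for row in reader)

sample_csv = (
    "id,company_name,country\n"
    "124,Stark Industries,US\n"
    "125,Future Technology,UK\n"
)

jsonl = csv_to_jsonl(sample_csv)
```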

# Import other file types

Typesense is primarily a JSON store, optimized for fast search. So if you can extract data from other file types and convert it into structured JSON, you can import it into Typesense and search through it.

For example, here's one library you can use to convert DOCX files to JSON. Once you've extracted the JSON, you can then index it in Typesense just like any other JSON file.

In general, you want to find libraries to convert your file type to JSON, and index that JSON into Typesense to search through it.

# Search

In Typesense, a search consists of a query against one or more text fields and a list of filters against numerical or facet fields. You can also sort and facet your results.

Sample Response

When a string[] field is queried, the highlights structure will include the corresponding matching array indices of the snippets. For example:

# Search Parameters

# Query parameters

| Parameter | Required | Description |
|---|---|---|
| q | yes | The query text to search for in the collection. Use * as the search string to return all documents. This is typically useful when used in conjunction with filter_by. For example, to return all documents that match a filter, use: q=*&filter_by=num_employees:10. To exclude words in your query explicitly, prefix the word with the - operator, e.g. q: 'electric car -tesla'. |
| query_by | yes | One or more string / string[] fields that should be queried against. Separate multiple fields with a comma: company_name, country. The order of the fields is important: a record that matches on a field earlier in the list is considered more relevant than a record matched on a field later in the list. So, in the example above, documents that match on the company_name field are ranked above documents matched on the country field. |
| filter_by | no | Filter conditions for refining your search results. A field can be matched against one or more values. Examples: country: USA; country: [USA, UK] returns documents that have country of USA OR UK. Exact Filtering: To match a string field exactly, you can use the := operator. For example, category:= Shoe will match documents from the category shoes and not from a category like shoe rack. Escaping Commas: You can also filter using multiple values and use the backtick character to denote a string literal: category:= [`Running Shoes, Men`, Sneaker]. Negation: Not equals / negation is supported for string and boolean facet fields, e.g. filter_by=author:!= JK Rowling. Numeric Filtering: Filter documents with numeric values between a min and max value, using the range operator [min..max] or using simple comparison operators >, >=, <, <=, =. Examples: num_employees:[10..100]; num_employees:<40. Multiple Conditions: Separate multiple conditions with the && operator. Examples: num_employees:>100 && country: [USA, UK]; categories:=Shoes && categories:=Outdoor. To do ORs across different fields (e.g. color is blue OR category is Shoe), split each condition into separate queries in a multi-query request and then aggregate the text match scores across requests. Filtering Arrays: filter_by can be used with array fields as well. For example, if genres is a string[] field: genres:=[Rock, Pop] will return documents where the genres array field contains Rock OR Pop; genres:=Rock && genres:=Acoustic will return documents where the genres array field contains both Rock AND Acoustic. |
| prefix | no | Indicates that the last word in the query should be treated as a prefix, and not as a whole word. This is necessary for building autocomplete and instant search interfaces. Set this to false to disable prefix searching for all queried fields. You can also control the behavior of prefix search on a per field basis. For example, if you are querying 3 fields and want to enable prefix searching only on the first field, use ?prefix=true,false,false. The order should match the order of fields in query_by. If a single value is specified for prefix, the same value is used for all fields specified in query_by. Default: true (prefix searching is enabled for all fields). |
| pre_segmented_query | no | Set this parameter to true if you wish to split the search query into space separated words yourself. When set to true, we will only split the search query by space, instead of using the locale-aware, built-in tokenizer. Default: false |
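Filter expressions contain characters such as `:`, `[`, `>` and `&` that must be percent-encoded when they travel in a query string. The sketch below assembles a search URL with `urllib.parse.urlencode`; the host and the `companies` collection name are placeholders chosen for illustration.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

def build_search_url(host, collection, params):
    """Return the search URL for `collection` with `params` percent-encoded."""
    # urlencode escapes '*', ':', '[', '&' and spaces so filters survive intact.
    return f"{host}/collections/{collection}/documents/search?{urlencode(params)}"

url = build_search_url(
    "http://localhost:8108",  # assumed host
    "companies",              # hypothetical collection name
    {
        "q": "stark",
        "query_by": "company_name,country",
        "filter_by": "num_employees:>100 && country: [USA, UK]",
        "prefix": "true,false",  # per-field values, in query_by order
    },
)

# Decoding the query string gives back the original parameter values.
decoded = parse_qs(urlsplit(url).query)
```

Boolean parameters are written as lowercase strings here because `urlencode` would render Python's `True` as `True`, not `true`.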

# Faceting parameters

| Parameter | Required | Description |
|---|---|---|
| facet_by | no | A list of fields that will be used for faceting your results on. Separate multiple fields with a comma. |
| max_facet_values | no | Maximum number of facet values to be returned. Default: 10 |
| facet_query | no | Facet values that are returned can now be filtered via this parameter. The matching facet text is also highlighted. For example, when faceting by category, you can set facet_query=category:shoe to return only facet values that contain the prefix "shoe". |

# Pagination parameters

| Parameter | Required | Description |
|---|---|---|
| page | no | Results from this specific page number would be fetched. Page numbers start at 1 for the first page. Default: 1 |
| per_page | no | Number of results to fetch per page. When group_by is used, per_page refers to the number of groups to fetch per page, in order to properly preserve pagination. Default: 10. NOTE: Only up to 250 hits (or groups of hits when using group_by) can be fetched per page. |

# Grouping parameters

| Parameter | Required | Description |
|---|---|---|
| group_by | no | You can aggregate search results into groups or buckets by specifying one or more group_by fields. Separate multiple fields with a comma, e.g. group_by=country,company_name. NOTE: To group on a particular field, it must be a faceted field. |
| group_limit | no | Maximum number of hits to be returned for every group. If the group_limit is set as K then only the top K hits in each group are returned in the response. Default: 3 |

# Results parameters

| Parameter | Required | Description |
|---|---|---|
| include_fields | no | Comma-separated list of fields from the document to include in the search result. |
| exclude_fields | no | Comma-separated list of fields from the document to exclude in the search result. |
| highlight_fields | no | Comma-separated list of fields that should be highlighted with snippeting. You can use this parameter to highlight fields that you don't query for, as well. Default: all queried fields will be highlighted. |
| highlight_full_fields | no | Comma-separated list of fields which should be highlighted fully without snippeting. Default: all fields will be snippeted. |
| highlight_affix_num_tokens | no | The number of tokens that should surround the highlighted text on each side. This controls the length of the snippet. Default: 4 |
| highlight_start_tag | no | The start tag used for the highlighted snippets. Default: <mark> |
| highlight_end_tag | no | The end tag used for the highlighted snippets. Default: </mark> |
| snippet_threshold | no | Field values under this length will be fully highlighted, instead of showing a snippet of relevant portion. Default: 30 |
| limit_hits | no | Maximum number of hits that can be fetched from the collection, e.g. 200. page * per_page should be less than this number for the search request to return results. Default: no limit. You'd typically want to generate a scoped API key with this parameter embedded and use that API key to perform the search, so it's automatically applied and can't be changed at search time. |
| search_cutoff_ms | no | Typesense will attempt to return results early if the cutoff time has elapsed. This is not a strict guarantee and facet computation is not bound by this parameter. Default: no search cutoff happens. |
| exhaustive_search | no | Setting this to true will make Typesense consider all variations of prefixes and typo corrections of the words in the query exhaustively, without stopping early when enough results are found (drop_tokens_threshold and typo_tokens_threshold configurations are ignored). Default: false |

# Caching parameters

| Parameter | Required | Description |
|---|---|---|
| use_cache | no | Enable server side caching of search query results. By default, caching is disabled. Default: false |
| cache_ttl | no | The duration (in seconds) that determines how long the search query is cached. This value can only be set as part of a scoped API key. Default: 60 |

# Typo-Tolerance parameters

| Parameter | Required | Description |
|---|---|---|
| num_typos | no | Maximum number of typographical errors (0, 1 or 2) that would be tolerated. Damerau–Levenshtein distance is used to calculate the number of errors. You can also control num_typos on a per field basis. For example, if you are querying 3 fields and want to disable typo tolerance on the first field, use ?num_typos=0,1,1. The order should match the order of fields in query_by. If a single value is specified for num_typos, the same value is used for all fields specified in query_by. Default: 2 (num_typos is 2 for all fields specified in query_by). |
| min_len_1typo | no | Minimum word length for 1-typo correction to be applied. The value of num_typos is still treated as the maximum allowed typos. Default: 4. |
| min_len_2typo | no | Minimum word length for 2-typo correction to be applied. The value of num_typos is still treated as the maximum allowed typos. Default: 7. |
| typo_tokens_threshold | no | If at least typo_tokens_threshold number of results are not found for a specific query, Typesense will attempt to look for results with more typos until num_typos is reached or enough results are found. Set typo_tokens_threshold to 0 to disable typo tolerance. Default: 1 |
| drop_tokens_threshold | no | If at least drop_tokens_threshold number of results are not found for a specific query, Typesense will attempt to drop tokens (words) in the query until enough results are found. Tokens that have the least individual hits are dropped first. Set drop_tokens_threshold to 0 to disable dropping of tokens. Default: 1 |
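To give a feel for what counts as one or two typos, here is a small sketch of the optimal string alignment variant of Damerau–Levenshtein distance, in which an insertion, deletion, substitution, or swap of two adjacent characters each costs 1. Which variant Typesense implements internally is not stated here, so treat this purely as an illustration of the metric.

```python
def osa_distance(a, b):
    """Optimal string alignment distance between strings a and b."""
    rows, cols = len(a) + 1, len(b) + 1
    d = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        d[i][0] = i  # delete all i characters of a
    for j in range(cols):
        d[0][j] = j  # insert all j characters of b
    for i in range(1, rows):
        for j in range(1, cols):
            cost = 0 if a[i - 1] == b[j - 1] else 1
            d[i][j] = min(
                d[i - 1][j] + 1,         # deletion
                d[i][j - 1] + 1,         # insertion
                d[i - 1][j - 1] + cost,  # substitution or match
            )
            # Swap of two adjacent characters counts as a single edit.
            if i > 1 and j > 1 and a[i - 1] == b[j - 2] and a[i - 2] == b[j - 1]:
                d[i][j] = min(d[i][j], d[i - 2][j - 2] + 1)
    return d[-1][-1]
```

Under this metric the query word `telsa` is one typo away from `tesla` (an adjacent swap), so it would fall within num_typos=1.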

# Ranking parameters

| Parameter | Required | Description |
|---|---|---|
| query_by_weights | no | The relative weight to give each query_by field when ranking results. Values can be between 0 and 127. This can be used to boost fields in priority, when looking for matches. Separate each weight with a comma, in the same order as the query_by fields. For example, query_by_weights: 1,1,2 with query_by: field_a,field_b,field_c will give equal weight to field_a and field_b, and twice the weight to field_c. Default: If no explicit weights are provided, fields earlier in the query_by list will be considered to have greater weight. |
| sort_by | no | A list of numerical fields and their corresponding sort orders that will be used for ordering your results. Separate multiple fields with a comma. Up to 3 sort fields can be specified in a single search query, and they'll be used as a tie-breaker: if the value in the first sort_by field ties for a set of documents, the value in the second sort_by field is used to break the tie, and if that also ties, the value in the 3rd field is used to break the tie between documents. If all 3 fields tie, the document insertion order is used to break the final tie. E.g. num_employees:desc,year_started:asc. The text similarity score is exposed as a special _text_match field that you can use in the list of sorting fields. If one or two sorting fields are specified, _text_match is used for tie breaking, as the last sorting field. Default: If no sort_by parameter is specified, results are sorted by: _text_match:desc,default_sorting_field:desc. GeoSort: When using GeoSearch, documents can be sorted around a given lat/long using location_field_name(48.853, 2.344):asc. You can also sort by additional fields within a radius. See the Geosearch section below. |
| prioritize_exact_match | no | By default, Typesense prioritizes documents whose field value matches exactly with the query. Set this parameter to false to disable this behavior. Default: true |
| pinned_hits | no | A list of records to unconditionally include in the search results at specific positions. An example use case would be to feature or promote certain items on the top of search results. A comma-separated list of record_id:hit_position. E.g. to include a record with ID 123 at Position 1 and another record with ID 456 at Position 5, you'd specify 123:1,456:5. You could also use the Overrides feature to override search results based on rules. Overrides are applied first, followed by pinned_hits and finally hidden_hits. |
| hidden_hits | no | A list of records to unconditionally hide from search results. A comma-separated list of record_ids to hide. E.g. to hide records with IDs 123 and 456, you'd specify 123,456. You could also use the Overrides feature to override search results based on rules. Overrides are applied first, followed by pinned_hits and finally hidden_hits. |
| enable_overrides | no | If you have some overrides defined but want to disable all of them for a particular search query, set enable_overrides to false. Default: true |

# Filter Results

You can use the filter_by search parameter to filter results by a particular value(s) or logical expressions.

For example, if you have a dataset of movies, you can apply a filter to only return movies in a certain genre or published after a certain date, etc.

You'll find detailed documentation for filter_by in the Search Parameters table above.

# Facet Results

You can use the facet_by search parameter to have Typesense return aggregate counts of values for one or more fields.

For integer fields, Typesense will also return min, max, sum and average values, in addition to counts.

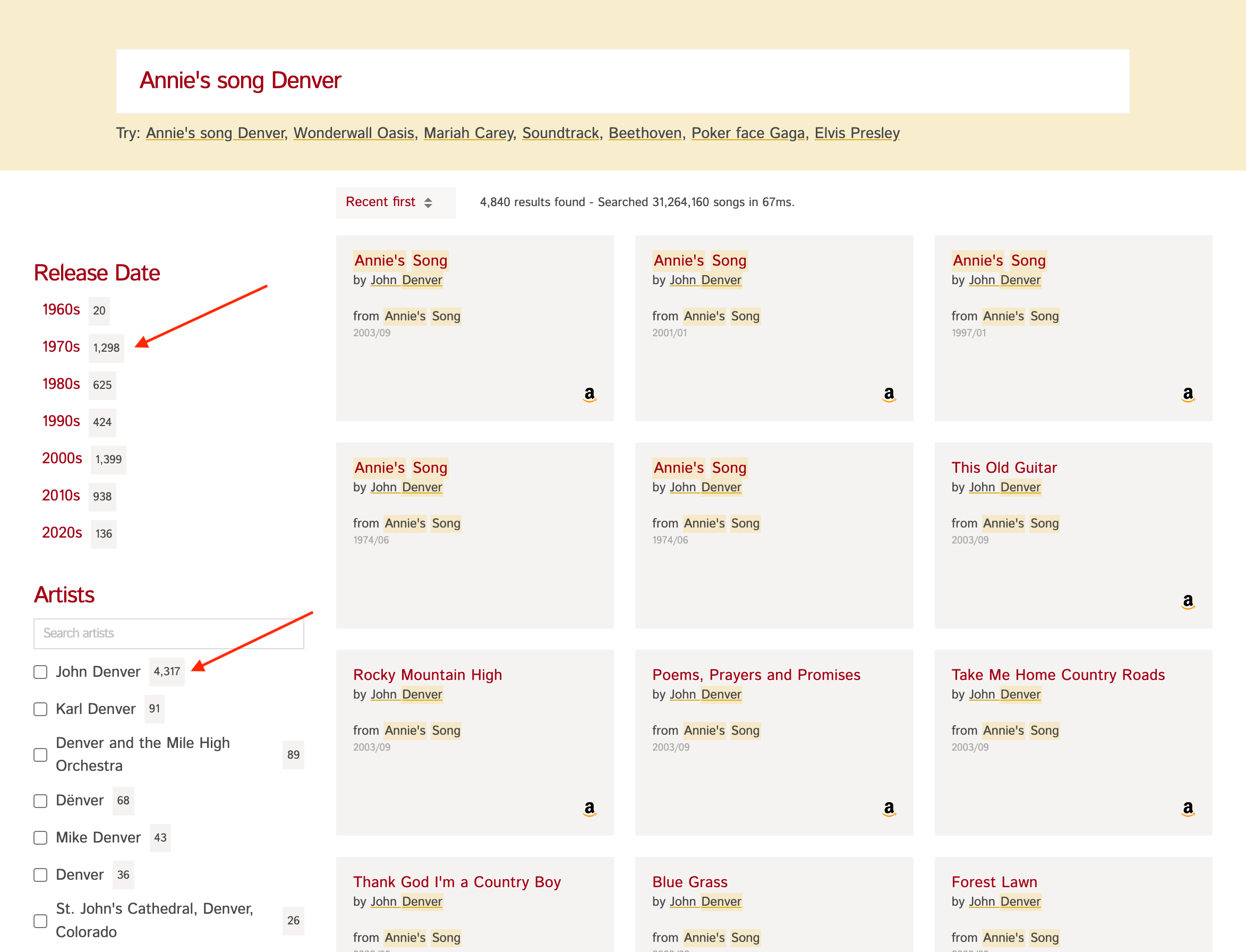

For example, if you have a dataset of songs like in the screenshot above, the count next to each of the "Release Dates" and "Artists" on the left is obtained by faceting on the release_date and artist fields.

This is useful to show users a summary of results, so they can refine the results further to get to what they're looking for efficiently.

Note that you need to enable faceting on a field using {fields: [{facet: true, name: "<field>", type: "<datatype>"}]} in the Collection Schema before using it in facet_by.

You'll find detailed documentation for facet_by in the Faceting parameters table above.

# Group Results

You can aggregate search results into groups or buckets by specifying one or more group_by fields.

Grouping hits this way is useful in:

- Deduplication: By using one or more group_by fields, you can consolidate items and remove duplicates in the search results. For example, if there are multiple shoes of the same size, by doing a group_by=size&group_limit=1, you ensure that only a single shoe of each size is returned in the search results.
- Correcting skew: When your results are dominated by documents of a particular type, you can use group_by and group_limit to correct that skew. For example, if your search results for a query contain way too many documents of the same brand, you can do a group_by=brand&group_limit=3 to ensure that only the top 3 results of each brand are returned in the search results.
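The effect of group_by and group_limit can be mimicked client-side to make the semantics concrete. The sketch below is only an illustration of the behavior described in the two cases above, not a description of how Typesense implements grouping; it assumes the hits arrive already sorted by relevance, and the sample records are invented.

```python
def group_hits(hits, group_by, group_limit=3):
    """Group relevance-ordered hits by the given fields, keeping the top hits per group."""
    groups = {}  # dicts preserve insertion order, so groups stay in first-seen order
    for hit in hits:
        key = tuple(hit[field] for field in group_by)
        bucket = groups.setdefault(key, [])
        if len(bucket) < group_limit:
            bucket.append(hit)  # later hits beyond the limit are discarded
    return groups

shoes = [
    {"name": "Runner", "brand": "Acme", "size": 9},
    {"name": "Sprinter", "brand": "Acme", "size": 9},
    {"name": "Walker", "brand": "Bolt", "size": 10},
]

# Deduplication: at most one shoe per size.
by_size = group_hits(shoes, ["size"], group_limit=1)
```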

TIP

To group on a particular field, it must be a faceted field.

Grouping returns the hits in a nested structure that's different from the plain JSON response format we saw earlier. Let's repeat the query we made earlier with a group_by parameter:

Definition

GET ${TYPESENSE_HOST}/collections/:collection/documents/search

# Pagination

You can use the page and per_page search parameters to control pagination of results.

By default, Typesense returns the top 10 results (page: 1, per_page: 10).

You'll find detailed documentation for these pagination parameters in the Pagination Parameters table above.
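When walking through all results, the page numbers to request follow from the total number of matches and the per_page value. The sketch below assumes the total is available to the caller as an integer (called `found` here, an assumed name) and enforces the 250-per-page limit mentioned in the Pagination parameters table.

```python
import math

MAX_PER_PAGE = 250  # upper bound on per_page noted in the parameter table

def page_numbers(found, per_page=10):
    """Return the 1-based page numbers needed to fetch `found` results."""
    if not 1 <= per_page <= MAX_PER_PAGE:
        raise ValueError(f"per_page must be between 1 and {MAX_PER_PAGE}")
    return range(1, math.ceil(found / per_page) + 1)

# 25 matching documents at the default page size need three requests.
pages = list(page_numbers(25))
```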

# Ranking

By default, Typesense ranks results by a text_match relevance score it calculates.

You can use various Search Parameters to influence the text match score, sort results by additional parameters and conditionally promote or hide results. Read more in the Ranking and Relevance Guide.

# Geosearch

Typesense supports geo search on fields containing latitude and longitude values, specified as the geopoint or geopoint[] field types.

Let's create a collection called places with a field called location of type geopoint.

Let's now index a document.

NOTE

Make sure to set the coordinates in the correct order: [Latitude, Longitude]. GeoJSON often uses [Longitude, Latitude], which is invalid in Typesense!

# Searching within a Radius

We can now search for places within a given radius of a given lat/long (use mi for miles and km for kilometers) using the filter_by search parameter.

In addition, let's also sort the records that are closest to a given location (this location can be the same or different from the latlong used for filtering).

Sample Response

The above example uses "5.1 km" as the radius, but you can also use miles, e.g. location:(48.90615915923891, 2.3435897727061175, 2 mi).
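The radius filter and distance sort expressions are plain strings, so they can be assembled with small helpers like the ones sketched here. The function names are hypothetical, and the output format simply follows the `field:(lat, lng, radius unit)` and `field(lat, lng):order` shapes used in this section.

```python
def radius_filter(field, lat, lng, radius, unit="km"):
    """Build a filter_by expression matching points within `radius` of (lat, lng)."""
    if unit not in ("km", "mi"):
        raise ValueError("unit must be 'km' or 'mi'")
    return f"{field}:({lat}, {lng}, {radius} {unit})"

def distance_sort(field, lat, lng, order="asc"):
    """Build a sort_by expression ordering results by distance from (lat, lng)."""
    if order not in ("asc", "desc"):
        raise ValueError("order must be 'asc' or 'desc'")
    return f"{field}({lat}, {lng}):{order}"

search_params = {
    "q": "*",
    "filter_by": radius_filter("location", 48.853, 2.344, 5.1),
    "sort_by": distance_sort("location", 48.853, 2.344),
}
```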

# Searching Within a Geo Polygon

You can also filter for documents within any arbitrary shaped polygon.

You want to specify the geo-points of the polygon as lat, lng pairs.

'filter_by' : 'location:(48.8662, 2.3255, 48.8581, 2.3209, 48.8561, 2.3448, 48.8641, 2.3469)'

# Sorting by Additional Attributes within a Radius

# exclude_radius

Sometimes, it's useful to sort nearby places within a radius based on another attribute like popularity, and then sort by distance outside this radius.

You can use the exclude_radius option for that.

'sort_by' : 'location(48.853, 2.344, exclude_radius: 2mi):asc, popularity:desc'

This makes all documents within a 2 mile radius "tie" with the same value for distance.

To break the tie, these records will be sorted by the next field in the list popularity:desc.

Records outside the 2 mile radius are sorted first on their distance and then on popularity:desc as usual.

# precision

Similarly, you can bucket all geo points into "groups" using the precision parameter, so that all results within this group will have the same "geo distance score".

'sort_by' : 'location(48.853, 2.344, precision: 2mi):asc, popularity:desc'

This will bucket the results into 2-mile groups and force records within each bucket into a tie for "geo score", so that the popularity metric can be used to tie-break and sort results within each bucket.
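The tie-breaking effect of exclude_radius and precision can be imitated with ordinary sort keys. The sketch below works on records that already carry a precomputed distance; the `distance_miles` and `popularity` keys and the sample data are invented, and the exact boundaries Typesense uses for the radius and for each bucket are assumptions.

```python
import math

def sort_with_exclude_radius(records, radius_miles):
    """Records inside the radius tie on distance and fall back to popularity."""
    def key(record):
        distance = record["distance_miles"]
        effective = 0.0 if distance <= radius_miles else distance
        return (effective, -record["popularity"])
    return sorted(records, key=key)

def sort_with_precision(records, precision_miles):
    """Records in the same distance bucket tie and fall back to popularity."""
    def key(record):
        bucket = math.floor(record["distance_miles"] / precision_miles)
        return (bucket, -record["popularity"])
    return sorted(records, key=key)

places = [
    {"name": "a", "distance_miles": 0.5, "popularity": 10},
    {"name": "b", "distance_miles": 1.5, "popularity": 90},
    {"name": "c", "distance_miles": 2.5, "popularity": 99},
    {"name": "d", "distance_miles": 3.5, "popularity": 100},
]
```

With a 2-mile exclude_radius only `a` and `b` are reordered by popularity, while a 2-mile precision also lets `d` outrank `c` because both fall into the same bucket.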

# Federated / Multi Search

You can send multiple search requests in a single HTTP request, using the Multi-Search feature. This is especially useful for avoiding the round-trip network latency you would otherwise incur by sending each request separately.

You can also use this feature to do a federated search across multiple collections in a single HTTP request.

For example, in an ecommerce products dataset, you can show results from both a "products" collection and a "brands" collection to the user, by searching them in parallel with a multi_search request.

Sample Response

Definition

POST ${TYPESENSE_HOST}/multi_search

# multi_search Parameters

You can use any of the Search Parameters here for each individual search operation within a multi_search request.

In addition, you can use the following parameters with multi_search requests:

| Parameter | Required | Description |
|---|---|---|
| limit_multi_searches | no | Max number of search requests that can be sent in a multi-search request, e.g. 20. Default: 50. You'd typically want to generate a scoped API key with this parameter embedded and use that API key to perform the search, so it's automatically applied and can't be changed at search time. |

TIP

The results array in a multi_search response is guaranteed to be in the same order as the queries you send in the searches array in your request.
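The ordering guarantee makes it straightforward to pair each query with its result. The following sketch builds a request body with a `searches` array and zips it against a `results` array; the `collection` key inside each search, the `name` field, and the trimmed fake response are assumptions made for the example.

```python
import json

def build_multi_search_body(searches):
    """Serialize a list of per-search parameter dicts into a request body."""
    return json.dumps({"searches": searches})

def pair_with_results(searches, response):
    """Match each search to its result, relying on the guaranteed ordering."""
    results = response["results"]
    if len(results) != len(searches):
        raise ValueError("expected one result per search")
    return list(zip(searches, results))

searches = [
    {"collection": "products", "q": "shoe", "query_by": "name"},
    {"collection": "brands", "q": "nike", "query_by": "name"},
]
body = build_multi_search_body(searches)

# Hypothetical, heavily trimmed response used only to show the pairing.
fake_response = {"results": [{"found": 12}, {"found": 1}]}
pairs = pair_with_results(searches, fake_response)
```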

# Retrieve a document

Fetch an individual document from a collection by using its id.

Sample Response

Definition

GET ${TYPESENSE_HOST}/collections/:collection/documents/:id

# Update a document

Update an individual document from a collection by using its id. The update can be partial, as shown below:

Sample Response

Definition

PATCH ${TYPESENSE_HOST}/collections/:collection/documents/:id

TIP

To update multiple documents, use the import endpoint with action=update or action=upsert.

# Delete documents

# Delete a single document

Delete an individual document from a collection by using its id.

Sample Response

Definition

DELETE ${TYPESENSE_HOST}/collections/:collection/documents/:id

# Delete by query

You can also delete a bunch of documents that match a specific filter_by condition:

Use the batch_size parameter to control the number of documents that should be deleted at a time. A larger value will speed up deletions, but will impact performance of other operations running on the server.

Sample Response

Definition

DELETE ${TYPESENSE_HOST}/collections/:collection/documents?filter_by=X&batch_size=N

TIP

To delete multiple documents by ID, you can use filter_by=id: [id1, id2, id3].

To delete all documents in a collection, you can use a filter that matches all documents in your collection.

For example, if you have an int32 field called popularity in your documents and every value is greater than 0, you can use filter_by=popularity:>0 to delete all documents.

Or if you have a bool field called in_stock in your documents, you can use filter_by=in_stock:[true,false] to delete all documents.
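As a small sketch of the delete-by-ID tip above, the helper below assembles the DELETE URL for a list of IDs with the filter percent-encoded. The host, the `companies` collection name, and the function name are placeholders for illustration.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

def delete_by_ids_url(host, collection, ids, batch_size=None):
    """Build the delete-by-query URL that targets the given document IDs."""
    params = {"filter_by": "id: [" + ", ".join(ids) + "]"}
    if batch_size is not None:
        params["batch_size"] = batch_size
    return f"{host}/collections/{collection}/documents?{urlencode(params)}"

url = delete_by_ids_url(
    "http://localhost:8108",  # assumed host
    "companies",              # hypothetical collection name
    ["124", "125", "126"],
    batch_size=100,
)

# Decoding shows the filter exactly as the server would receive it.
decoded = parse_qs(urlsplit(url).query)
```

The URL would be sent with the DELETE method, as in the definition above.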

# Export documents

Export documents in a collection in JSONL format.

Sample Response

While exporting, you can use the following parameters to control the result of the export:

| Parameter | Description |
|---|---|
| filter_by | Restrict the exports to documents that satisfy the filter query. |
| include_fields | List of fields that should be present in the exported documents. |
| exclude_fields | List of fields that should not be present in the exported documents. |

Definition

GET ${TYPESENSE_HOST}/collections/:collection/documents/export
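Since the export is JSONL, the response body can be turned back into documents one line at a time. This is a minimal sketch that assumes the whole body fits in memory; the sample string stands in for a real export response.

```python
import json

def parse_jsonl(body):
    """Parse a JSONL export into a list of dicts, ignoring blank lines."""
    # Split on "\n" only: str.splitlines() would also break on characters
    # such as U+2028, which may appear unescaped inside JSON strings.
    return [json.loads(line) for line in body.split("\n") if line.strip()]

exported = (
    '{"id": "124", "company_name": "Stark Industries", "num_employees": 5215, "country": "US"}\n'
    '{"id": "125", "company_name": "Future Technology", "num_employees": 1232, "country": "UK"}\n'
)
documents = parse_jsonl(exported)
```

For very large collections it would be preferable to read and parse the response as a stream, line by line.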
